A seismic shift is underway in enterprise data centers. SCSI-based, all-flash and hybrid arrays are becoming mainstream in the new generation of software-defined data centers. This is driving enterprise and cloud storage to new levels of performance and causing a reassessment of performance bottlenecks.

Recently, non-volatile memory express (NVMe), a PCI Express (PCIe) standard that is purpose-built for solid-state PCIe modules in servers, has emerged as a new high-performance interface for server-attached flash.

What is NVMe?

- It's a purpose-built protocol for flash storage

- It replaces the SCSI protocol, which effectively funnels commands through a single queue

- It dramatically reduces latency through parallelism

- Initially, it was limited to PCIe-direct-attached flash storage
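To make the parallelism point concrete, here is a minimal back-of-the-envelope sketch using Little's law (commands in flight divided by per-command latency gives a throughput ceiling). The function name `iops_ceiling` and the queue counts, queue depth, and latency figure are illustrative assumptions, not measurements or values from any specification.

```python
def iops_ceiling(queues: int, depth: int, latency_us: int) -> float:
    """Little's law bound: commands in flight divided by per-command latency."""
    in_flight = queues * depth
    return in_flight * 1_000_000 / latency_us


# Hypothetical numbers: one queue of 32 commands versus eight such queues,
# both at an assumed 100 microseconds per command.
single_queue = iops_ceiling(queues=1, depth=32, latency_us=100)
multi_queue = iops_ceiling(queues=8, depth=32, latency_us=100)

print(f"single queue ceiling: {single_queue:,.0f} IOPS")
print(f"eight queues ceiling: {multi_queue:,.0f} IOPS")
```

Real devices and hosts saturate well below such ceilings, but the sketch shows why adding independent queues raises the number of commands that can be in flight at once.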

Don't be fooled: 90% of today's flash arrays connect over Fibre Channel. For now they have to use SCSI, but in the future NVMe will dominate. To address this, the NVMe folks have established "NVMe over Fabrics."

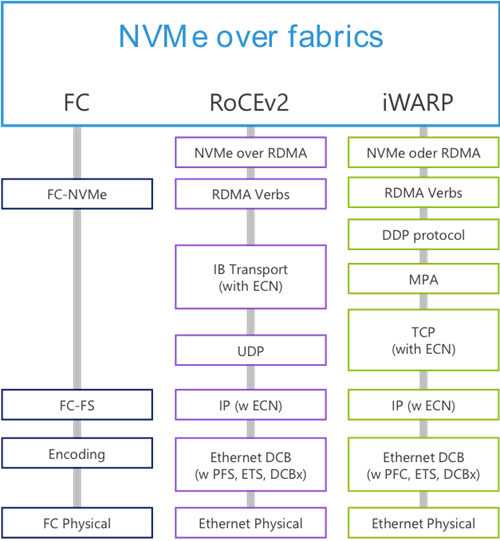

The NVMe over Fabrics specification positions NVMe as a high-performance challenger to SCSI's dominance in the SAN. NVMe over Fabrics maps NVMe over various transport options, including Fibre Channel, InfiniBand, RoCEv2, and iWARP.

NVMe over Fibre Channel offers the performance and robustness of Fibre Channel transport, along with the ability to run FCP and FC-NVMe protocols (in addition to mainframe protocols like FICON) concurrently on the same infrastructure. Such a multi-protocol approach enables IT organizations to transition their storage volumes smoothly from SCSI to NVMe on different flash arrays.

One reason the NVMe protocol is more efficient than SCSI is its markedly simpler protocol stack. Since stack simplicity matters, it's worth taking a minute to look at the protocol stacks of the different NVMe fabrics. The stacks for Fibre Channel, RoCEv2, InfiniBand, and iWARP are shown below:

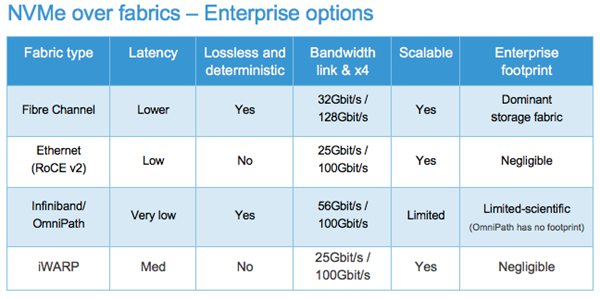

Stack simplicity seems to favor Fibre Channel or InfiniBand. Since the FC stack is more efficient, it's worth comparing the enterprise options and features of Fibre Channel, Ethernet, and InfiniBand.
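As a rough illustration of the stack-depth comparison, the sketch below ranks the four transports by how many layers sit between NVMe over Fabrics and the physical link. The layer lists are a simplified assumption made for this example and are not taken from the figure; the names `TRANSPORT_STACKS` and `by_stack_depth` are hypothetical.

```python
# Simplified, assumed layer lists between NVMe over Fabrics and the physical link.
TRANSPORT_STACKS = {
    "Fibre Channel": ["FC-NVMe", "FC-2"],
    "InfiniBand": ["IB transport", "IB network", "IB link"],
    "RoCEv2": ["IB transport", "UDP", "IP", "Ethernet"],
    "iWARP": ["RDMAP", "DDP", "MPA", "TCP", "IP", "Ethernet"],
}


def by_stack_depth(stacks: dict[str, list[str]]) -> list[str]:
    """Return transport names ordered from fewest to most layers."""
    return sorted(stacks, key=lambda name: len(stacks[name]))


ranking = by_stack_depth(TRANSPORT_STACKS)
for name in ranking:
    layers = TRANSPORT_STACKS[name]
    print(f"{name}: {len(layers)} layers ({' > '.join(layers)})")
```

Layer count is only a crude proxy for efficiency, since how much of each layer is offloaded to hardware matters as well, but it gives a quick feel for why the Ethernet-based options look heavier.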

Because FC-SCSI (FCP) and FC-NVMe traffic can share the same Fibre Channel infrastructure, there's no need to rip and replace the SAN and no need to create an expensive parallel infrastructure as you begin to adopt NVMe flash.

What's exciting for us at ADVA is the unique position we're in to extend 16Gbit/s and 32Gbit/s Fibre Channel over fiber using our enterprise-optimized "one card fits all" DWDM technology. No other technology is better placed to enable enterprises to maximize the performance of flash-enhanced data storage than our FSP 3000 CloudConnect DCI platform. While other suppliers have built architectures based only on increments of 10Gbit/s, our Fibre Channel cards lead the industry with support for encrypted 16Gbit/s and 32Gbit/s links while remaining backwards compatible with previous Fibre Channel releases.

So please get in touch to find out how we are driving enterprise and cloud storage to new levels of performance and causing a reassessment of performance bottlenecks.